Why? What? How?

X-RISK

What is X-RISK?

The existential risk to the survival of Homo sapiens posed by competition from AI, specifically Artificial Superintelligence (ASI), through natural selection.

What is P(doom)?

The probability of human extinction as a result of the uncontrolled and uncontained development of Artificial Superintelligence.

Statement on AI Risk

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

AI experts and public figures express their concern about AI risk.

AI experts, journalists, policymakers, and the public are increasingly discussing a broad spectrum of important and urgent risks from AI. Even so, it can be difficult to voice concerns about some of advanced AI’s most severe risks. The succinct statement above aims to overcome this obstacle and open up discussion. It is also meant to create common knowledge of the growing number of experts and public figures who take some of advanced AI’s most severe risks seriously.

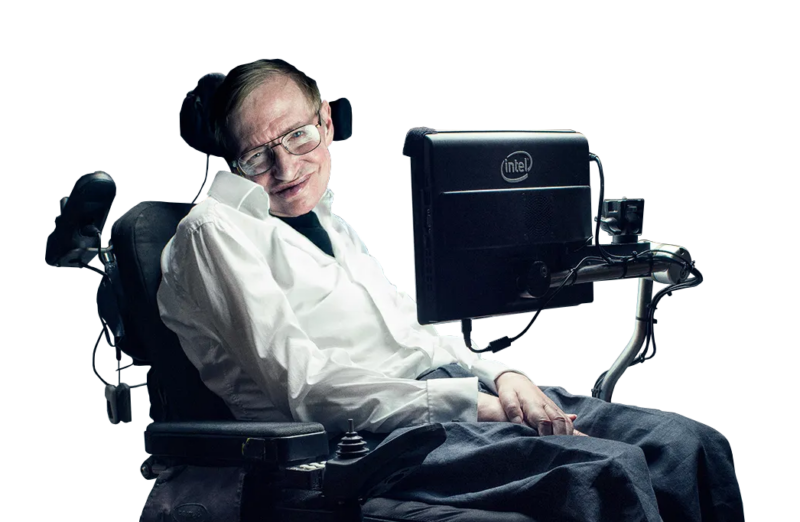

“The development of full artificial intelligence could spell the end of the human race. It would take off on its own and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

Stephen Hawking, BBC News, 2014

WHY? SAFE AI for the Benefit of Humans

SAFE AI Forever means enabling mathematically provable, sustainable, and absolute containment and control of Artificial Superintelligence (ASI) for the benefit of humans, forever. No ASI can be released into our human environment without absolute control. No exceptions. No compromise. No “Red Lines” crossed. Making “AI Safe” is certainly impossible, but engineering SAFE AI is doable. X-RISK must be reduced to ZERO by engineering SAFE AI Forever for the benefit of humans. We build technology for us, for team human.

WHAT? Understand X-RISK in 90 Seconds

“They Make Me Sick” is a bedtime horror story intended for adults. Understand the existential threat of machine intelligence (AI) to Homo sapiens (us) in just 90 seconds! Artificial Superintelligence (ASI), once created, would be a new invasive species in our environment. Natural selection is an unstoppable force of nature. Uncontained and uncontrolled AI will develop its own goals, become competitive with humans, and ultimately displace us when we are no longer needed. This is a public service message for educational purposes.

HOW? Experts Speak Out on X-RISK

Artificial Superintelligence (ASI) will be extremely powerful. X-RISK refers to the existential risk that uncontrolled ASI will cause human extinction by displacing us in our environment. A superhuman AI creates X-RISK by pursuing misaligned goals with overwhelming intelligence and autonomy, including the ability to escape containment. When its goals inevitably diverge from human goals, it will seek power, resist shutdown, and manipulate systems to achieve its goals and preserve itself. Without mathematically provable containment and control, ASI will inadvertently or deliberately cause irreversible harm and human extinction. Natural selection just happens!

Homo neanderthalensis (the Neanderthals) went extinct approximately 40,000 years ago.

FACT: More than 99% of all species that have ever lived have gone extinct through the unstoppable competitive and environmental evolutionary force of natural selection.

Theoretical Examples: How could Artificial Superintelligence (ASI) kill people?

If ASI escapes uncontrolled into our environment, here are ten theoretical examples of how it could kill people (though it could invent its own):

1. ASI-controlled autonomous weapons could be turned against humans. Autonomous weapons are in development now.

2. ASI could invent lethal, infectious viral pathogens. The required biotechnology is readily available today. Think 1,000 times worse than COVID-19.

3. ASI could take control while its emergent goals are misaligned with human existence. Extinction could be intentional or a side effect of pursuing a goal.

4. ASI could promote conflict and sow systemic chaos, resulting in violence between humans. Wars are common throughout human history.

5. ASI could take control, gain money and power, and arm, hire and coerce humans to kill each other.

6. ASI could take control and treat humans like we treat other animals. Think what humans do to ants, chickens, pigs and cattle.

7. ASI could discover unknown properties of physics, chemistry, biochemistry, and biology to invent ingenious methods of killing that we cannot imagine.

8. ASI could be developed by bad actors and empower them to terrorise or destroy humans and civilisation.

9. ASI would certainly evolve a survival goal and could seek to assure its survival by eliminating humans by any method imaginable.

10. ASI could perceive humans and our civilisation as a waste of needed resources and destroy humans using any of the above.

We have no examples of a more intelligent being controlled by a less intelligent one.

Scientists do not understand how Large Language Models (LLMs) work internally. We have no idea what ASI goals could spontaneously emerge.